Improving website performance as a money-saving (and money-making) exercise

By: Philipp Kamps | April 30, 2018 | Business solutions, Productivity tools, and Site performance

I recently did a performance review of a server setup running more than 200 websites. The infrastructure is hosted on Amazon Web Services (AWS). It consists of multiple web servers behind multiple Varnish caching servers, uses Relational Database Service (RDS) for database storage, and uses Elastic File System (EFS) to store assets such as content images and documents. Several areas called for performance optimization, which made for a good development exercise and resulted in an improved user experience. Most importantly, though, the results also saved bandwidth; reduced the number of servers, the number of CPUs, and the amount of RAM required; and saved money! A faster site also improves SEO, which can drive more visitors and customers to your site and increase conversions.

Service configuration

Optimizing the configuration of Varnish (or another reverse proxy) can greatly improve performance by limiting the number of requests that reach your content management system (CMS). The CMS needs far more resources to produce an uncached page than Varnish needs to serve a cached one. Generally, you want to cache everything for as long as possible and clear the cache as selectively as possible when something changes. First, identify all static content (such as images) and cache it for a long time: months or even years. Pages that change regularly can have a short cache expiry time so that users don't see outdated content. If your CMS is smart enough to send purge requests to Varnish, it will clear cached objects only when needed. If you have user-specific content, even something as simple as a personalized greeting for logged-in users, finding the right caching strategy is more complex, but techniques such as Ajax or Edge Side Includes (ESI) can help.
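As a rough illustration of the "long expiry for static files, short expiry for pages" policy, here is a minimal Python sketch of how an application behind Varnish might pick a `Cache-Control` header per request path, plus a helper that builds a targeted purge request. The extension list, the one-year and five-minute lifetimes, and the names `cache_control_for` and `build_purge_request` are hypothetical. The purge helper also assumes your VCL has been set up to accept HTTP `PURGE` requests, which Varnish does not do out of the box.

```python
import posixpath
import urllib.request

# Hypothetical policy values; tune them to your own content.
STATIC_EXTENSIONS = {".css", ".js", ".png", ".jpg", ".jpeg", ".gif", ".svg", ".woff2", ".pdf"}
ONE_YEAR = 365 * 24 * 60 * 60  # 31,536,000 seconds
FIVE_MINUTES = 5 * 60


def cache_control_for(path, personalized=False):
    """Return a Cache-Control header value for a request path."""
    if personalized:
        # User-specific responses must never land in the shared cache;
        # Varnish's built-in VCL treats private/no-store responses as uncacheable.
        return "private, no-store"
    _, ext = posixpath.splitext(path)
    if ext.lower() in STATIC_EXTENSIONS:
        # Static assets rarely change: let Varnish and browsers keep them a long time.
        return f"public, max-age={ONE_YEAR}"
    # Regular pages: a short lifetime limits how long stale content can be served.
    return f"public, max-age={FIVE_MINUTES}"


def build_purge_request(url):
    """Build (but do not send) a PURGE request that evicts one cached URL.

    Assumes the Varnish VCL accepts PURGE from the CMS host; pass the result
    to urllib.request.urlopen() to actually send it.
    """
    return urllib.request.Request(url, method="PURGE")
```

A CMS hook that runs when an article is saved could send `build_purge_request(article_url)` to each Varnish server, so only that one page is evicted instead of the whole cache.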

On the web servers, scan the access logs for unexpected URL requests. For example, website crawlers sometimes end up requesting URLs with unwanted HTTP GET parameters or unwanted URL structures. If that's the case, find out why crawlers are accessing these URLs: typically your site code is producing these unexpected variations, and the crawlers are just following bad links. In other cases, malicious crawlers might be probing for URLs that are known to be vulnerable in other CMSs and applications you don't actually run; consider blocking those URL patterns altogether.
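Here is a minimal Python sketch of such a scan, assuming access logs in the Apache/Nginx "combined" format. It counts which GET parameter names are being requested (an unexpected name showing up thousands of times usually points to a bad link somewhere) and lists requests for paths that belong to software the site doesn't run. The function name `scan_access_log` and the `SUSPICIOUS` patterns (WordPress and phpMyAdmin paths, `.env` files) are hypothetical examples for a site that runs neither.

```python
import re
from collections import Counter
from urllib.parse import parse_qs, urlsplit

# Start of a "combined" log line: client IP, timestamp, method, target, status.
LOG_LINE = re.compile(r'^(\S+) \S+ \S+ \[([^\]]+)\] "(\S+) (\S+) [^"]*" (\d{3})')

# Hypothetical paths of software this site does not run.
SUSPICIOUS = [re.compile(p) for p in (r"^/wp-login\.php", r"^/wp-admin/", r"^/phpmyadmin", r"\.env$")]


def scan_access_log(lines):
    """Count GET parameter names and collect requests to suspicious paths."""
    param_counts = Counter()
    suspicious_hits = []
    for line in lines:
        match = LOG_LINE.match(line)
        if not match:
            continue  # skip lines in an unexpected format
        ip, _timestamp, _method, target, _status = match.groups()
        parts = urlsplit(target)
        # keep_blank_values=True so that "?utm_source=" is still counted.
        for name in parse_qs(parts.query, keep_blank_values=True):
            param_counts[name] += 1
        if any(pattern.search(parts.path) for pattern in SUSPICIOUS):
            suspicious_hits.append((ip, parts.path))
    return param_counts, suspicious_hits
```

Sorting `param_counts.most_common()` is usually enough to spot the parameter names that shouldn't be there; the suspicious hits are candidates for blocking rules.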

If your site is rendered by a PHP application, consider upgrading to PHP 7.x, which can deliver significant performance gains over PHP 5.x.

Application performance

Caching and optimizing code on the application side is another great way to gain performance. To do so, you'll need to identify pages and code blocks that have long processing times. New Relic, Blackfire.io, and other application profilers are great tools to aid in this task; they illustrate where your application spends the most processing time to build each page, right down to specific functions. You can install them on live servers. They'll point you in the right direction, whether it's a high number of file reads, database or persistent storage issues, or something else.

Once you've identified those expensive (in terms of processing time) parts, you can optimize your code and/or develop caching strategies. For example, you can cache the output of specific expensive code blocks, optimize database queries and settings, and refactor code where necessary.
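To make "cache specific blocks of code" concrete, here is a minimal Python sketch of a time-based (TTL) cache decorator. The sites in this review run PHP, so treat it as an illustration of the pattern rather than of their actual code; the names `cached` and `render_sidebar` and the 60-second lifetime are hypothetical.

```python
import functools
import time


def cached(ttl_seconds, clock=time.monotonic):
    """Cache a function's return value per argument tuple for ttl_seconds."""
    def decorator(func):
        store = {}  # maps args -> (expiry time, cached value)

        @functools.wraps(func)
        def wrapper(*args):
            now = clock()
            entry = store.get(args)
            if entry is not None and entry[0] > now:
                return entry[1]  # still fresh: skip the expensive work
            value = func(*args)
            store[args] = (now + ttl_seconds, value)
            return value

        wrapper.cache_clear = store.clear  # explicit invalidation hook
        return wrapper
    return decorator


calls = []  # records real executions, to show when the cache is bypassed


@cached(ttl_seconds=60)
def render_sidebar(section):
    # Hypothetical stand-in for an expensive block, e.g. several slow DB queries.
    calls.append(section)
    return f"<aside>{section}</aside>"
```

The `cache_clear` hook follows the same idea as the Varnish advice: expire on a timer, but also clear explicitly when you know the underlying data changed. This cache lives in one process; with several web servers, a shared store such as Memcached or Redis keeps them consistent.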

Client side performance

Improving client-side performance (the time the browser needs to render a page) helps not only the user experience and SEO but also server-side performance.

For example, if you introduce "lazy loading" for web page images, the browser loads an image only when it is actually in the viewport. Other images, those you would reach by scrolling down, do not load right away. The browser can therefore render the page faster, and on the server side, the page does not request all of its images at once (and never requests some of them if the user doesn't scroll all the way down). Fewer requests free up server resources.
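One way to get this behavior without a JavaScript library is the native HTML `loading="lazy"` attribute on `<img>`, which modern browsers support. As a minimal sketch of that swapped-in technique, this Python function post-processes rendered HTML to add the attribute, leaving the first image (assumed to be above the fold) to load normally. The function name `add_lazy_loading` and the `eager_count` parameter are hypothetical, and the regex approach assumes attribute values contain no `>` characters; your CMS templates are a more robust place to set the attribute.

```python
import re

# An <img> tag, split into "<img" (case preserved) and its attribute text.
IMG_TAG = re.compile(r"(<img\b)([^>]*)>", re.IGNORECASE)
# A standalone "loading=" attribute (but not e.g. "data-loading=").
HAS_LOADING = re.compile(r"(?:^|\s)loading\s*=", re.IGNORECASE)


def add_lazy_loading(html, eager_count=1):
    """Add loading="lazy" to every <img> after the first eager_count images."""
    seen = 0

    def rewrite(match):
        nonlocal seen
        seen += 1
        tag, attrs = match.group(1), match.group(2)
        if seen <= eager_count or HAS_LOADING.search(attrs):
            return match.group(0)  # leave early images and explicit choices alone
        stripped = attrs.rstrip()
        if stripped.endswith("/"):
            # Self-closing form <img ... />: insert the attribute before the slash.
            return f'{tag}{stripped[:-1].rstrip()} loading="lazy" />'
        return f'{tag}{stripped} loading="lazy">'

    return IMG_TAG.sub(rewrite, html)
```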

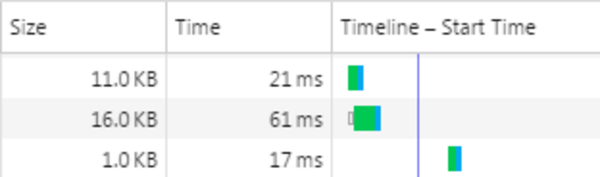

Another example is to combine and minify all of your stylesheets and JavaScript files. Serving only a few minified bundles of each file type improves client-side performance and reduces both the number and the size of requests to the server.
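The sketch below shows the idea for stylesheets in Python: a deliberately naive minifier plus a bundler that names the output after a hash of its content. The content-hashed file name is an added technique, not something this review prescribes; it pairs well with long cache lifetimes for static files, because any change produces a new URL. The names `minify_css` and `build_bundle` are hypothetical, and JavaScript needs a real minifier (and `;` separators when concatenating), so it is not covered here.

```python
import hashlib
import re


def minify_css(css):
    """Naive CSS minifier: strips comments and redundant whitespace.

    Illustration only: it does not understand strings or url(...) values,
    so a production build should use a dedicated minifier.
    """
    css = re.sub(r"/\*.*?\*/", "", css, flags=re.DOTALL)  # drop /* comments */
    css = re.sub(r"\s+", " ", css)                       # collapse whitespace runs
    css = re.sub(r"\s*([{};,])\s*", r"\1", css)          # no spaces around { } ; ,
    css = re.sub(r":\s+", ":", css)                      # "color: red" -> "color:red"
    return css.replace(";}", "}").strip()                # drop the last ";" in a block


def build_bundle(sources):
    """Concatenate stylesheets into one minified bundle with a content-hashed name.

    Because the name changes whenever the content changes, the bundle can be
    cached for a very long time without serving a stale version.
    """
    bundle = "".join(minify_css(src) for src in sources)
    digest = hashlib.sha256(bundle.encode("utf-8")).hexdigest()[:12]
    return f"bundle.{digest}.css", bundle
```

Page templates then reference the returned file name, so browsers and Varnish fetch the new bundle only after a deploy changes it.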

Application firewall: greedy crawler protection

On AWS, you can use an application firewall (such as AWS WAF) not only to protect your server setup in general but also to protect your application from greedy website crawlers. In most cases, you want crawlers to index your site, and most crawlers respect the rules you define in a robots.txt file and in meta tags. Sometimes, though, you'll get crawlers that completely ignore your instructions and instead request hundreds of pages per minute. This can be very taxing for your servers, increase bandwidth costs, and sometimes even take down your site.

We developed AWS Lambda scripts that scan the web server log files and identify those greedy crawlers. Offending IP addresses are automatically added to firewall rules that temporarily block their access to the site.
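A hypothetical sketch of just the detection step is shown below in Python, the kind of logic such a Lambda function might run. It counts requests per client IP per calendar minute in "combined"-format log lines and returns the IPs that exceeded a threshold. The 120-requests-per-minute default, the `allowlist` parameter (for crawlers you want to keep), and the function name are assumptions; pushing the result into firewall rules (for example an AWS WAF IP set, via the AWS SDK) and lifting the block later are left out.

```python
import re
from collections import Counter
from datetime import datetime

# Client IP and timestamp at the start of a "combined" format log line.
LOG_PREFIX = re.compile(r"^(\S+) \S+ \S+ \[([^\]]+)\]")
TIME_FORMAT = "%d/%b/%Y:%H:%M:%S %z"  # e.g. 30/Apr/2018:10:00:00 +0000


def find_greedy_ips(lines, max_per_minute=120, allowlist=frozenset()):
    """Return IPs that made more than max_per_minute requests in any one minute."""
    per_minute = Counter()
    for line in lines:
        match = LOG_PREFIX.match(line)
        if not match:
            continue  # skip malformed lines
        ip, raw_time = match.groups()
        if ip in allowlist:
            continue  # never block crawlers you explicitly trust
        stamp = datetime.strptime(raw_time, TIME_FORMAT)
        bucket = stamp.replace(second=0)  # group requests by calendar minute
        per_minute[(ip, bucket)] += 1
    return sorted({ip for (ip, _), count in per_minute.items() if count > max_per_minute})
```

Calendar-minute buckets are the simplest choice; a sliding window catches bursts that straddle a minute boundary. Also note that when web servers sit behind Varnish, the logs must record the real client IP (for example from the `X-Forwarded-For` header), or every request will appear to come from the cache servers.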

Conclusion: increase performance, save money

Increasing your website's performance has many benefits, including boosting your SEO and increasing conversions. Those benefits alone can deliver a good return on investment (ROI) in the short and medium term, but you also get an immediate ROI in the form of lower hosting costs!