Extending eZ Find: How to specify minimum relevance values using Solr frange queries

By: Mo Ismailzai | December 8, 2016 | Business solutions, eZ Publish add-ons, and User experience

eZ Find, the enterprise search extension for eZ Publish and wrapper for the Apache Solr search engine, is a highly performant alternative to manually searching through databases. We recently extended eZ Find to support Solr frange queries, and specifically to support minimum relevance values.

eZ Find supports faceted drill-down searches, provides tunable relevancy rankings, automates database indexing and maintenance, and much more. It’s no secret that Mugo loves eZ Find and we’ve also written about hardening eZ Find installations, enhancing eZ Find indices, and getting creative with eZ Find’s built-in boosting directives. However, as versatile as it is, sometimes the built-in functionality is not enough.

One of our clients maintains a large digital archive that allows editors and subscribers to search through articles using keywords. Search results can be sorted by most relevant or most recent, and the results are displayed in descending order.

When results are sorted by relevance, the items at the top of the list are highly related to the search term and those at the bottom have a more tangential connection. In general, this is desirable because it allows every user to decide when the results are no longer useful for their needs.

However, these less-relevant items posed a challenge for our client. When editors sorted by most recent, the list of results was reordered such that recent items were always displayed first, regardless of their relevance. This meant that editors could no longer identify a point where the results stopped being useful because highly related and highly tangential matches could appear anywhere on the list. Editors had to sift through pages of results any time they wanted recently published articles that were highly relevant to a search phrase. Our task was to remove the least relevant results.

The challenge: Effective search result filtering

At first glance, this might sound like a simple task: why not take the list of results provided to us by eZ Find, drop the bottom n%, and display what remains?

Implementation challenges aside, simply dropping results from the bottom of the list fails to maximize on Solr’s sophisticated scoring algorithm and eZ Find’s tunable relevancy ranking. Put simply, sometimes all results could be high-quality matches, and other times, they could all be low-quality ones.

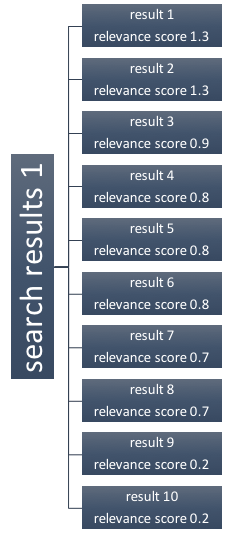

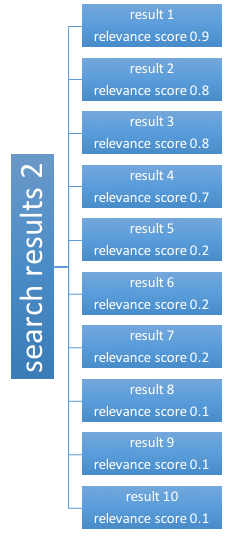

To illustrate, consider the following sets of hypothetical search results:

Relevancy scores range from 0.2 - 1.3 in the first list, 0.1 - 0.9 in the second list, and 0.7 - 2 in the third list. If we drop the bottom 20% (results 9 and 10) from all three lists, we’d get a reasonable outcome in the first list because results 9 and 10 in that list have low relevancy scores. However, in the second list we’d still be left with results 5 through 8 (which also have low relevancy scores), and in the third list we’d lose results 9 and 10 (which have high relevancy scores). Therefore, simply dropping results from the bottom of the list is a suboptimal solution and one we ruled out.

We knew we wanted to limit results by relevance score but eZ Find does not have a built-in filter for this. We considered looping through the entire result set to check relevance scores and drop any below a cut-off value, but this would be inefficient and impractical at any kind of scale. And once the results were extracted from and modified outside of eZ Find, it would no longer be possible to operate on the list using Solr (to re-sort, for instance).

We wanted a better solution.

The solution: Stipulating relevance minimums using frange queries

To solve this problem, we decided to extend eZ Find to support Solr’s powerful frange queries (find the Mugo pull request here). Our approach instructs Solr to disregard documents below a certain score. For instance, the following query instructs Solr to return the 10 most recent documents that are published under node 5555 and that score higher than 0.35 relevance against the search phrase “mugo”:

fetch( 'ezfind', 'search', hash(

'query', 'mugo',

'raw_filter', array( '{!frange l=0.35\}query({!edismax v=$q\})' ),

'subtree_array', array( '5555' ),

'sort_by', hash( 'published', 'desc' ),

'offset', 0,

'limit', 10

))For the three hypothetical lists above, a 0.35 relevance cutoff would drop results 9 and 10 from the first list, results 5 through 10 in the second list, and keep every result in the third list – much better! Moreover, by keeping the solution inside eZ Find, we continue to reap all of its benefits.

A few notes on the "raw_filter" parameter:

- It expects an array and can support multiple filters,

- In the context of the eZ Publish template language, closing }s must be escaped,

- if you’re not using the standard Solr query parser you must specify a parser to use. By default, eZ Find uses the "edismax" query parser, which we’ve specified in the raw filter’s "query()" parameter.

As for what constitutes a good cutoff value, the answer is highly subjective and depends on the documents indexed and the specific use case.

Do you need a custom solution for a unique technical challenge? Contact us any time for a consultation.